Advanced Lane Finding

From Udacity:

The goals / steps of this project are the following:

- Compute the camera calibration matrix and distortion coefficients given a set of chessboard images.

- Apply a distortion correction to raw images.

- Use color transforms, gradients, etc., to create a thresholded binary image.

- Apply a perspective transform to rectify binary image (“birds-eye view”).

- Detect lane pixels and fit to find the lane boundary.

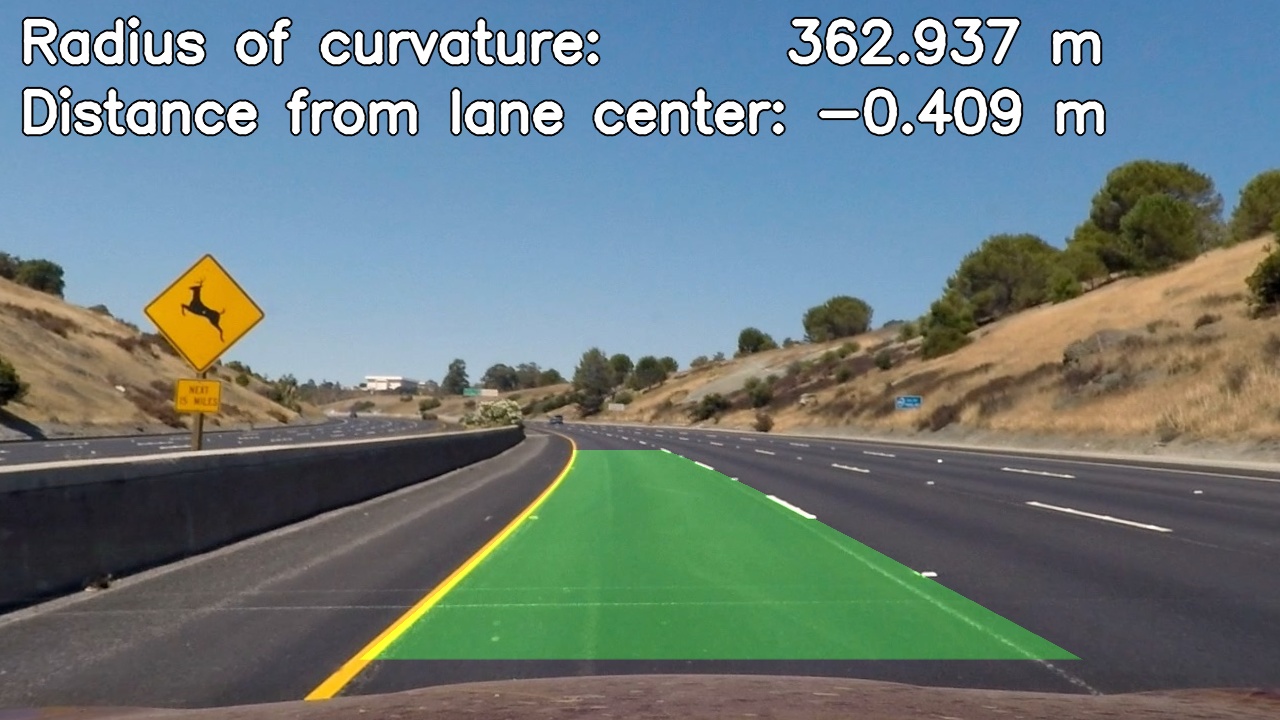

- Determine the curvature of the lane and vehicle position with respect to center.

- Warp the detected lane boundaries back onto the original image.

- Output visual display of the lane boundaries and numerical estimation of lane curvature and vehicle position.

Rubric Points

Here I will consider the rubric points individually and describe how I addressed each point in my implementation.

Writeup / README

1. Provide a Writeup / README that includes all the rubric points and how you addressed each one. You can submit your writeup as markdown or pdf. Here is a template writeup for this project you can use as a guide and a starting point.

You’re reading it!

Camera Calibration

1. Briefly state how you computed the camera matrix and distortion coefficients. Provide an example of a distortion corrected calibration image.

The code for this step can be found under "1. Compute the camera calibration matrix and distortion coefficients given a set of chessboard images." and in image.calibrate_camera().

I loaded the calibration images and converted them to grayscale. These images have 9 interior corners in the x direction (nx = 9) and 6 interior corners in the y direction (ny = 6). I used cv2.findChessboardCorners() to find these corners in each image; whenever they were found, I appended the chessboard grid indices to the array objpoints and the corresponding pixel coordinates to the array imgpoints. I then used cv2.calibrateCamera() to compute the camera matrix and distortion coefficients.
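The calibration steps described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual image.calibrate_camera(): the helper names make_object_points and calibrate_from_chessboards are hypothetical, and OpenCV is imported inside the second function only so that the grid helper can be tried without it.

```python
import numpy as np


def make_object_points(nx=9, ny=6):
    """Return the (nx*ny, 3) grid of chessboard corner indices (x, y, 0)."""
    objp = np.zeros((nx * ny, 3), np.float32)
    # x varies fastest, matching the order in which OpenCV reports corners.
    objp[:, :2] = np.mgrid[0:nx, 0:ny].T.reshape(-1, 2)
    return objp


def calibrate_from_chessboards(image_paths, nx=9, ny=6):
    """Hypothetical helper: collect corner correspondences and calibrate."""
    import cv2  # only this function needs OpenCV

    objp = make_object_points(nx, ny)
    objpoints, imgpoints = [], []  # grid indices and pixel coordinates
    img_size = None
    for path in image_paths:
        gray = cv2.cvtColor(cv2.imread(path), cv2.COLOR_BGR2GRAY)
        img_size = gray.shape[::-1]  # (width, height)
        found, corners = cv2.findChessboardCorners(gray, (nx, ny), None)
        if found:  # skip images where the full grid is not visible
            objpoints.append(objp)
            imgpoints.append(corners)
    _, mtx, dist, _, _ = cv2.calibrateCamera(
        objpoints, imgpoints, img_size, None, None
    )
    return mtx, dist
```

With mtx and dist in hand, an image can be undistorted with cv2.undistort(img, mtx, dist, None, mtx).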

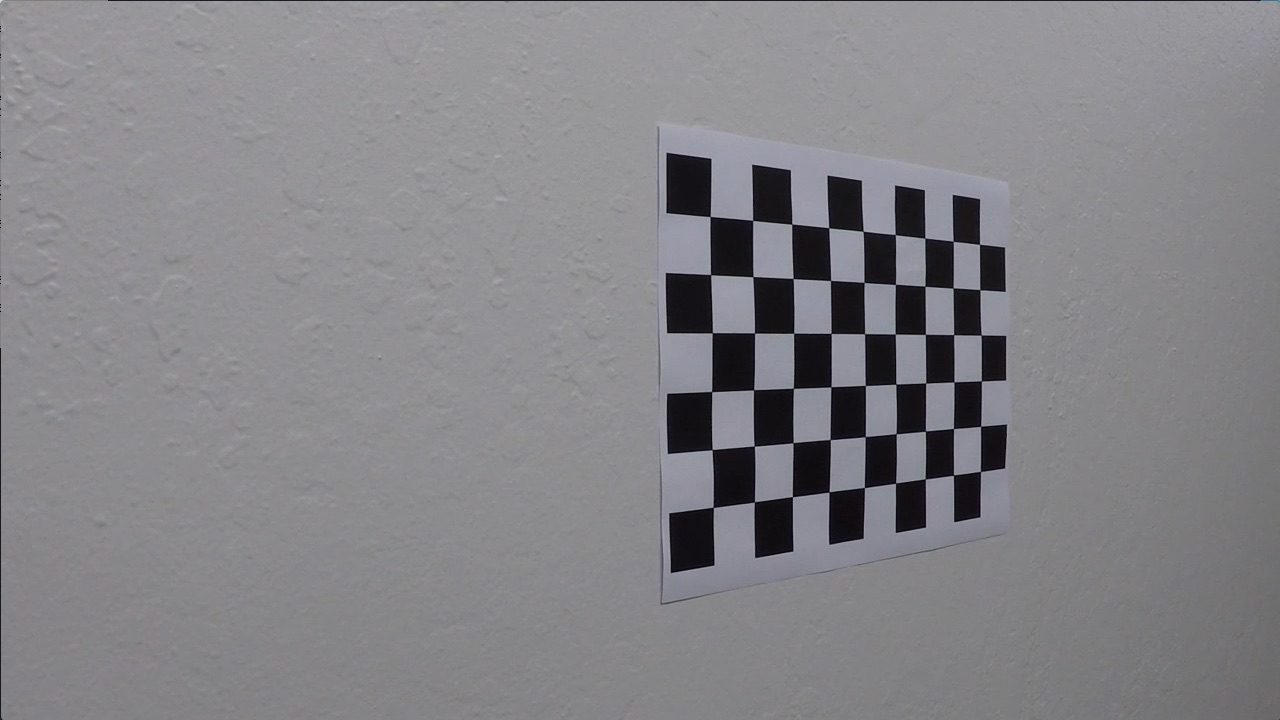

Here is the original “calibration8.jpg”:

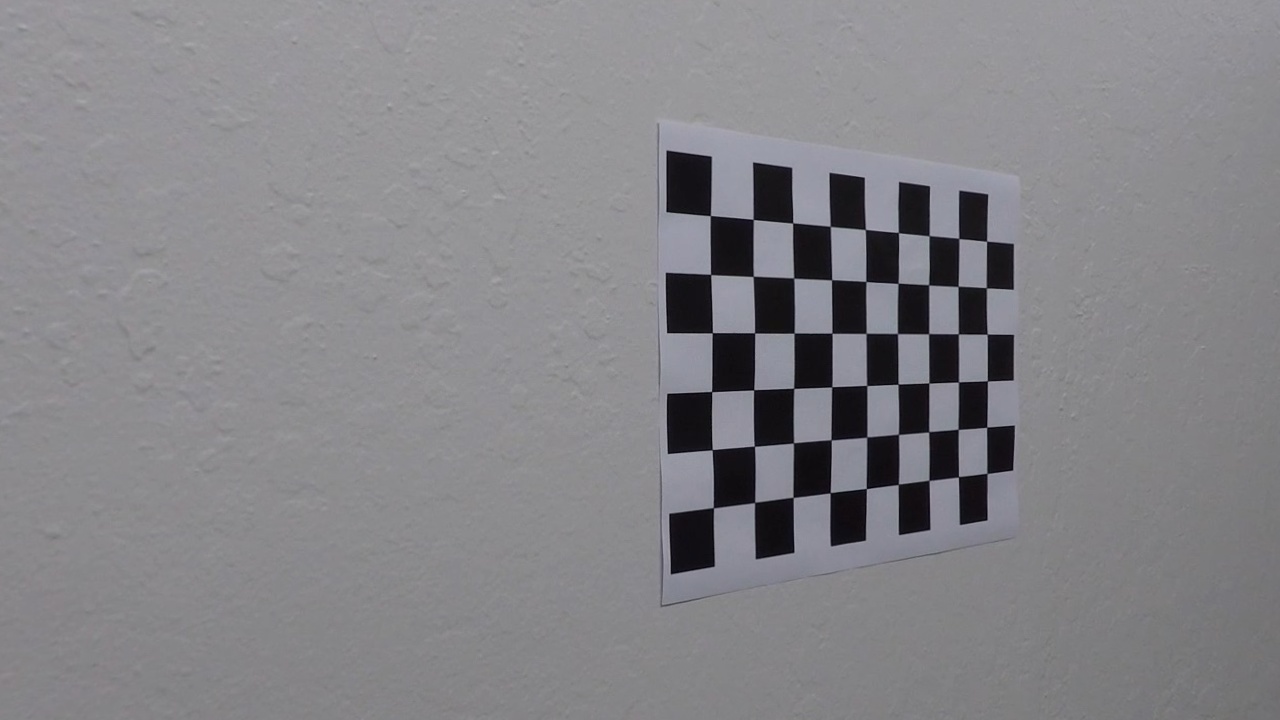

And here is the undistorted image:

Pipeline (single images)

1. Provide an example of a distortion-corrected image.

Here is the original “straight_lines1.jpg”:

And here is the undistorted image:

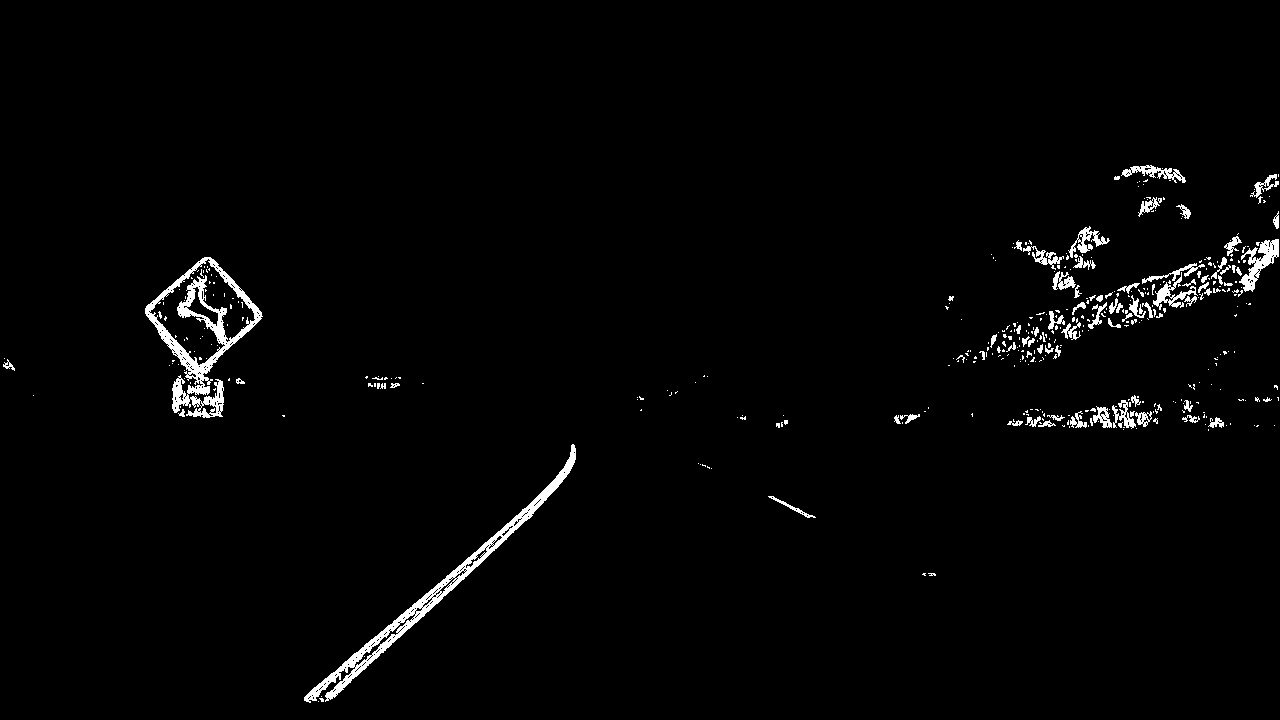

2. Describe how (and identify where in your code) you used color transforms, gradients or other methods to create a thresholded binary image. Provide an example of a binary image result.

I used a combination of color and gradient thresholds to generate a binary image. More specifically, I thresholded the gradient of the L channel (from HLS) and the pixel values of the L channel (from LUV) and the B channel (from LAB). I also applied a mask, zeroing out the left, center, and right regions of the image.
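As a rough sketch of how such thresholds and a column mask might be combined, consider the following. Several things here are assumptions rather than the project's code: the three channels are taken as already extracted (for example via cv2.cvtColor with the HLS, LUV, and LAB conversions), the threshold values and kept column bands are placeholders, the masks are combined with a logical OR, and np.gradient is used as a simple stand-in for a Sobel filter.

```python
import numpy as np


def in_range(channel, lo, hi):
    """Return a uint8 mask that is 1 where lo <= channel <= hi."""
    return ((channel >= lo) & (channel <= hi)).astype(np.uint8)


def x_gradient(channel):
    """Absolute horizontal gradient scaled to 0-255 (stand-in for Sobel)."""
    grad = np.abs(np.gradient(channel.astype(np.float64), axis=1))
    peak = grad.max()
    return np.zeros_like(grad) if peak == 0 else 255.0 * grad / peak


def region_mask(shape, keep_cols):
    """Mask that is 1 only inside the given (start, stop) column bands."""
    mask = np.zeros(shape, dtype=np.uint8)
    for start, stop in keep_cols:
        mask[:, start:stop] = 1
    return mask


def binary_image(l_hls, l_luv, b_lab, keep_cols,
                 grad_thresh=(30, 255),   # placeholder values
                 l_thresh=(215, 255),     # placeholder values
                 b_thresh=(150, 255)):    # placeholder values
    """OR the three thresholded masks, then zero out unwanted columns."""
    combined = (
        in_range(x_gradient(l_hls), *grad_thresh)
        | in_range(l_luv, *l_thresh)
        | in_range(b_lab, *b_thresh)
    )
    return combined & region_mask(combined.shape, keep_cols)
```

Keeping two column bands, one per lane line, has the effect of zeroing out the left, center, and right regions of the image.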

Here’s an example of my output for this step:

3. Describe how (and identify where in your code) you performed a perspective transform and provide an example of a transformed image.

See Lane.get_perspective() and Perspective transform constants

For my perspective transform, I specified 4 corners of a trapezoid (as src) in the undistorted original images and I specified the 4 corresponding corners of a rectangle (as dst) to which they are mapped in the perspective image. After inspecting an undistorted image, I specified the parameters as follows:

    img_size = (1280, 720)

    src = [[ 595, 450],
           [ 685, 450],
           [1000, 660],
           [ 280, 660]]

    dst = [[300,   0],
           [980,   0],
           [980, 720],
           [300, 720]]
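Four point correspondences like these determine a 3x3 perspective matrix. In OpenCV, cv2.getPerspectiveTransform(src, dst) returns that matrix and cv2.warpPerspective applies it to an image. The sketch below solves the same linear system in NumPy so the mapping can be checked by hand. It treats the first set of four points as src (the trapezoid) and the second as dst (the rectangle); perspective_matrix and warp_points are hypothetical helper names.

```python
import numpy as np


def perspective_matrix(src, dst):
    """Solve for the 3x3 matrix M that maps each src point onto its dst point."""
    src = np.asarray(src, dtype=np.float64)
    dst = np.asarray(dst, dtype=np.float64)
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        # u = (h0*x + h1*y + h2) / (h6*x + h7*y + 1), and similarly for v.
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows), np.array(rhs))
    return np.append(h, 1.0).reshape(3, 3)


def warp_points(M, pts):
    """Apply M to an (N, 2) array of points using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=np.float64)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ M.T
    return homog[:, :2] / homog[:, 2:3]


src = [[595, 450], [685, 450], [1000, 660], [280, 660]]
dst = [[300, 0], [980, 0], [980, 720], [300, 720]]

M = perspective_matrix(src, dst)   # road view -> bird's-eye view
Minv = np.linalg.inv(M)            # bird's-eye view -> road view
```

The inverse matrix is what allows detected lane boundaries to be warped back onto the original image later in the pipeline.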

Here is the undistorted “straight_lines1.jpg”:

And here is a perspective view of the same image (with lines drawn):

4. Describe how (and identify where in your code) you identified lane-line pixels and fit their positions with a polynomial?

See Lane.fit_lines()

Here are the steps I followed for fitting the lane lines.

- Get the thresholded binary image and apply the perspective transform. See

Lane.get_binary_perspective()

- Find the sets of points to which I’ll fit a polynomial in order to get the left and right lane lines. If there was a prior image (i.e.,

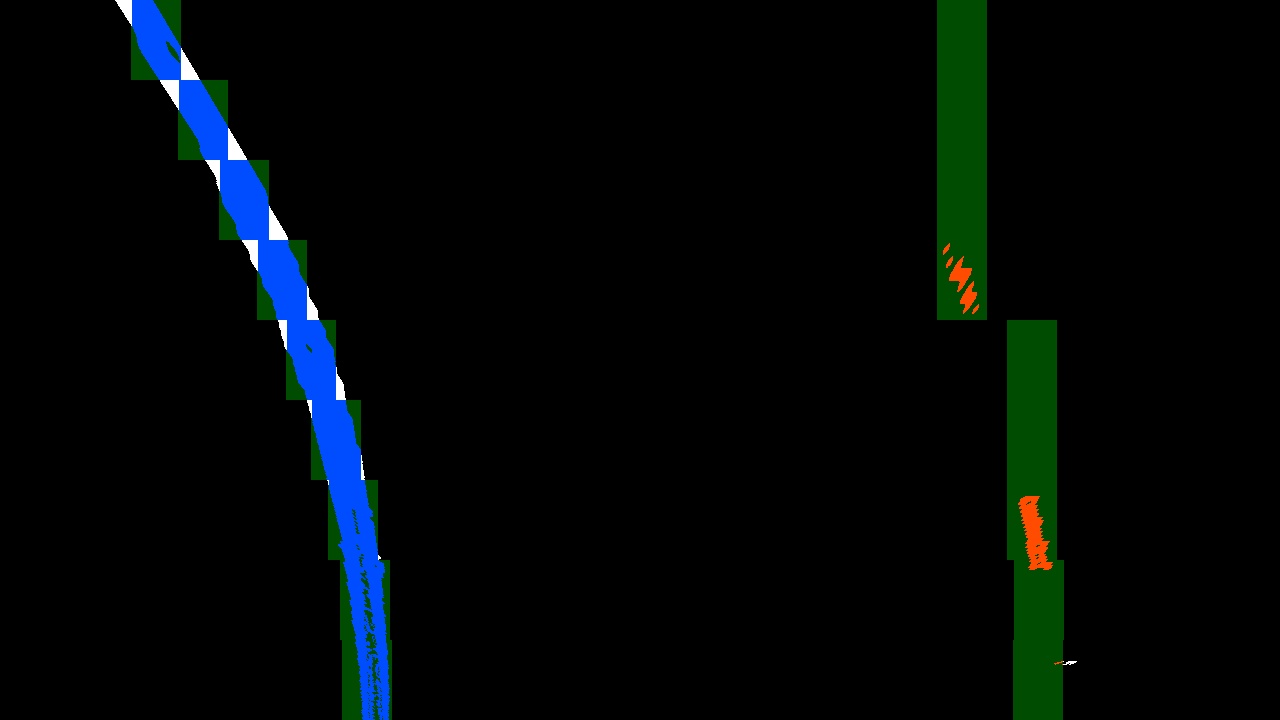

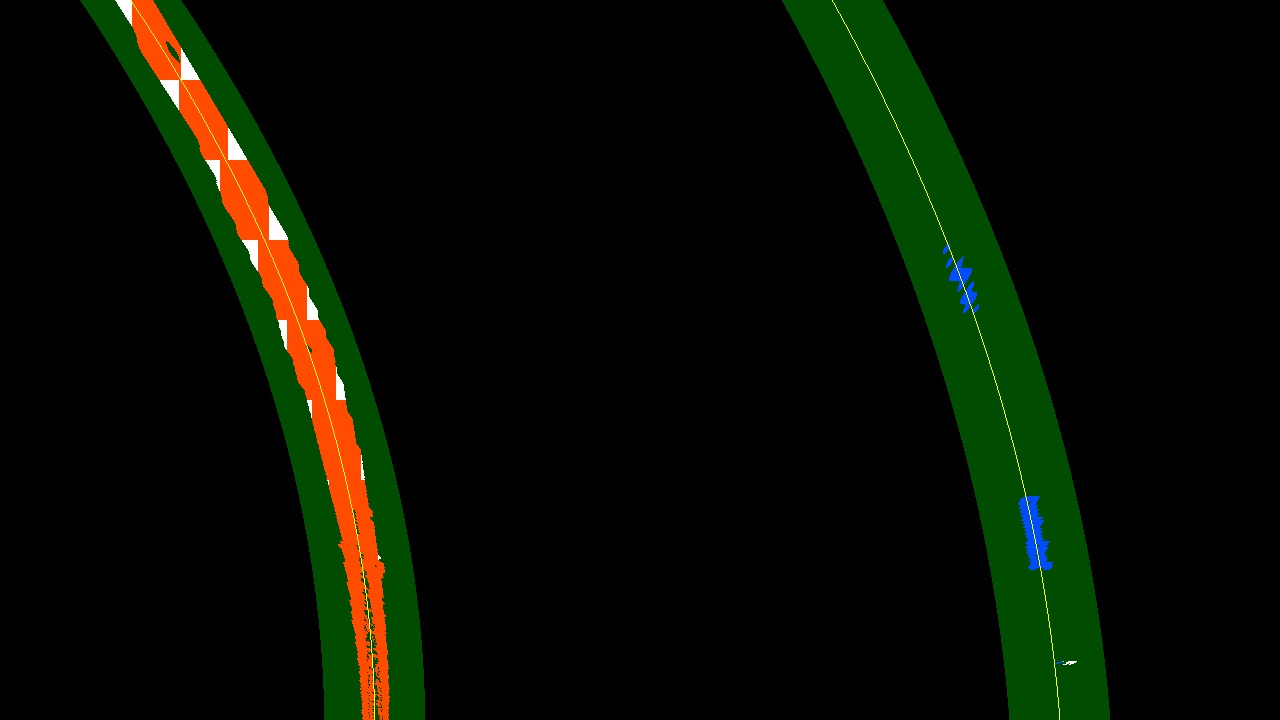

Laneobject), then I used the points within +/-margin_priorof the previous fitted line; otherwise, I used a sliding window search (seeLane.find_window_centroids(). Here is an example plot of the points used to fit the lane lines (in red and blue) found viaLane.find_window_centroids():

-

Fit polynomials to the sets of points found in the previous step. My code accepts a parameter

dthat specifies the degree of the polynomials, but I only experimented withd = 2. I fitted polynomials for both pixels and meters as the measurement units. In the next step, I did some post-processing on these polynomials. -

Post-process the fitted polynomials. If the image (i.e.,

Laneobject) was part of a sequence, I averaged the polynomial coefficients over the lastn=3polynomial fits.
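The prior-based point selection, the polynomial fit, and the averaging step can be sketched as below. This is an illustration under assumptions, not Lane.fit_lines() itself: the function and class names are hypothetical, each line is modeled as x = f(y), and the meters-per-pixel scale factors are passed in by the caller.

```python
import numpy as np
from collections import deque


def points_near_prior(xs, ys, prior_coeffs, margin_prior):
    """Keep points whose x is within +/- margin_prior of the prior line."""
    expected_x = np.polyval(prior_coeffs, ys)
    keep = np.abs(xs - expected_x) <= margin_prior
    return xs[keep], ys[keep]


def fit_line(xs, ys, d=2, xm_per_pix=1.0, ym_per_pix=1.0):
    """Fit x = f(y) with a degree-d polynomial.

    With the default scale factors the fit is in pixels; passing real
    meters-per-pixel factors gives the fit in meters.
    """
    return np.polyfit(ys * ym_per_pix, xs * xm_per_pix, d)


class SmoothedFit:
    """Average polynomial coefficients over the last n fits."""

    def __init__(self, n=3):
        self.history = deque(maxlen=n)  # old fits drop off automatically

    def update(self, coeffs):
        self.history.append(np.asarray(coeffs, dtype=float))
        return np.mean(list(self.history), axis=0)
```

Averaging coefficients is a simple way to damp frame-to-frame jitter, at the cost of reacting a little more slowly when the road curvature changes.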

Here is an example of the final fitted lane lines, which I generated using Lane.plot_lines_perspective():

5. Describe how (and identify where in your code) you calculated the radius of curvature of the lane and the position of the vehicle with respect to center.

See Lane.get_rad_curv() and Lane.get_offset()

I calculated the left and right radii of curvature using the formula:

r = (1 + (first_deriv)**2)**1.5 / abs(second_deriv)

For the overall/center radius of curvature, I took whichever polynomial was fitted to more unique y values and used its radius of curvature (after adding/subtracting half of the lane width).
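The curvature formula can be written as a short function. This is a sketch that assumes the polynomial is stored as NumPy-style coefficients (highest power first) for x = f(y), fitted in meters, and evaluated at a y value in meters; rad_curv here is an illustrative helper, not necessarily the same as Lane.get_rad_curv(). The lane-width adjustment described above is not included.

```python
import numpy as np


def rad_curv(coeffs, y):
    """Radius of curvature of x = f(y) at the given y."""
    first_deriv = np.polyval(np.polyder(coeffs, 1), y)
    second_deriv = np.polyval(np.polyder(coeffs, 2), y)
    return (1 + first_deriv ** 2) ** 1.5 / np.abs(second_deriv)


# For x = y**2 / (2 * R), the radius at y = 0 is R.
radius = rad_curv([1 / (2 * 500.0), 0.0, 0.0], 0.0)
```

Using np.polyder keeps the function valid for any polynomial degree d, not just d = 2.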

I calculated the offset of the vehicle by evaluating the left and right polynomials (in meters) at the bottom of the image, y = (rows - 1) * ym_per_pix, and taking the difference between the resulting x values and the center column of the image, converted to meters: center = cols / 2 * xm_per_pix.
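A minimal sketch of this offset calculation follows. It makes two assumptions that the text does not spell out: the lane center is taken as the midpoint of the left and right x values, and a positive result means the image center lies to the right of the lane center. The function name and the scale factors used in the example are illustrative.

```python
import numpy as np


def vehicle_offset(left_fit_m, right_fit_m, rows, cols,
                   xm_per_pix, ym_per_pix):
    """Offset (in meters) of the image center from the lane center."""
    y_bottom = (rows - 1) * ym_per_pix           # bottom row, in meters
    x_left = np.polyval(left_fit_m, y_bottom)
    x_right = np.polyval(right_fit_m, y_bottom)
    lane_center = (x_left + x_right) / 2         # assumed: midpoint of lines
    image_center = cols / 2 * xm_per_pix
    return image_center - lane_center


# Example with assumed scale factors and straight vertical lane lines.
xm, ym = 3.7 / 700, 30 / 720
offset = vehicle_offset([0, 0, 340 * xm], [0, 0, 1020 * xm],
                        rows=720, cols=1280, xm_per_pix=xm, ym_per_pix=ym)
```

In this example the lane center sits 40 pixels to the right of the image center, so the offset is negative under the sign convention chosen here.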

6. Provide an example image of your result plotted back down onto the road such that the lane area is identified clearly.

Here is an example of the lane lines plotted on the undistorted road image for “test2.jpg”:

Pipeline (video)

1. Provide a link to your final video output. Your pipeline should perform reasonably well on the entire project video (wobbly lines are ok but no catastrophic failures that would cause the car to drive off the road!).

Here’s a link to my video result

Discussion

1. Briefly discuss any problems / issues you faced in your implementation of this project. Where will your pipeline likely fail? What could you do to make it more robust?

Challenges

Getting good quality binary images was more difficult than I expected, and I still feel that there is room for improvement on this aspect of the project. The difficulty was detecting the lanes but not detecting other edges, such as those due to shadows or the edge of the road.

Where the pipeline struggles

As can be seen in the video, this pipeline struggles when the car bounces and when the slope of the road changes. This is because the hard-coded perspective transform parameters are no longer correct in those frames. A better approach might involve finding the vanishing point of each image; that is, the point at which lines that are parallel on the road, such as straight lane lines, appear to converge.
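To make the vanishing-point idea concrete, here is a small sketch that intersects two image lines using homogeneous coordinates. It is not part of the pipeline. As a stand-in for detected lane lines, it uses the left and right edges of the hard-coded src trapezoid from the perspective transform step; the helper names are hypothetical.

```python
import numpy as np


def line_through(p, q):
    """Homogeneous line through two (x, y) points."""
    return np.cross([p[0], p[1], 1.0], [q[0], q[1], 1.0])


def intersection(l1, l2):
    """Intersection point (x, y) of two homogeneous lines."""
    x, y, w = np.cross(l1, l2)
    if np.isclose(w, 0.0):
        raise ValueError("lines are parallel in the image")
    return x / w, y / w


left_edge = line_through((280, 660), (595, 450))
right_edge = line_through((1000, 660), (685, 450))
vanishing_point = intersection(left_edge, right_edge)
```

For these edges the intersection is at (640, 420). If the vanishing point were estimated per frame, the src corners could in principle be re-derived from it instead of being fixed.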

Where the pipeline might fail

A couple of scenarios that could cause this pipeline to fail include:

- The car is not driving within the lane lines. This pipeline assumes that the car is driving more or less in the center of the lane. It would likely fail if the car were to change lanes because the model is simply not designed to handle a complex case such as this, especially considering that I used a mask which zeroed out the left, right, and center of the image in order to obtain the binary thresholded image.

- The binary thresholding portion of the pipeline fails to eliminate edges which are not lane lines. While I did not work much with the challenge video, a previous version of my pipeline performed poorly on that video due to the line in the middle of the lane between the light and dark asphalt. The model might also struggle with words or markings painted on the road, such as “SLOW” or a crosswalk. In order to accurately fit a polynomial to the lane lines, we need clean data points with as little noise as possible.